
AI in Psychiatry: Things Are Moving Fast


AI is evolving and changing the landscape of medicine and psychiatry. Here’s how clinicians can understand AI’s potential for psychiatry as well as its limitations.


Artificial intelligence (AI) refers to computer systems that perform tasks that typically require human intelligence. These can include learning from experience, problem-solving, speech or language understanding, and even decision-making. Large language models (LLMs) are a specific type of AI designed for understanding and generating natural language.

These models are pre-trained on vast amounts of diverse text data, allowing them to perform language-related tasks such as text completion, summarization, translation, question-answering, and more. Given a prompt or context, the LLM can generate fluent, coherent, human-like language responses.
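The idea of "given a prompt, generate a continuation" can be made concrete with a deliberately tiny sketch of autoregressive generation. This is a word-level bigram model built from a hypothetical three-sentence corpus, not an LLM. Real LLMs use transformer networks with billions of parameters over subword tokens, but they follow the same basic loop: predict the next token from the preceding context, append it, and repeat.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus; real LLMs are pre-trained on vastly larger, more diverse text.
CORPUS = [
    "the patient reports low mood",
    "the patient reports poor sleep",
    "the clinician reviews the patient history",
]

def train_bigrams(sentences):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts

def generate(counts, prompt, max_new_words=5):
    """Greedy autoregressive generation: repeatedly append the most frequent next word.

    Ties go to the follower that was seen first in the corpus.
    """
    words = prompt.split()
    for _ in range(max_new_words):
        followers = counts.get(words[-1])
        if not followers:
            break  # no known continuation for the last word
        words.append(followers.most_common(1)[0][0])
    return " ".join(words)

model = train_bigrams(CORPUS)
print(generate(model, "the patient"))  # prints: the patient reports low mood
```

Greedy selection is used here only to keep the output deterministic; chatbots generally sample from the predicted probabilities instead, which is one reason the same prompt can yield different answers.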

Generative Pre-trained Transformer (GPT) is a family of LLMs developed by OpenAI, Inc. The models have improved rapidly since OpenAI released GPT-1 in 2018, with gains in complex reasoning and coherence. The number of learned parameters grew from 110 million (GPT-1, 2018) to 175 billion (GPT-3, 2020).1,2

Launched on March 14, 2023, GPT-4 is the latest model in OpenAI's GPT series and is thought to have more than 1 trillion parameters, although OpenAI has not disclosed the figure. It offers more advanced reasoning and a greater ability to follow complex instructions, and OpenAI reports that on its internal evaluations it is 40% more likely than GPT-3.5 to produce factual responses.3 However, GPT-4 can still return biased, inappropriate, and inaccurate responses.

Revolutionary Potential of AI and Generative Language Models

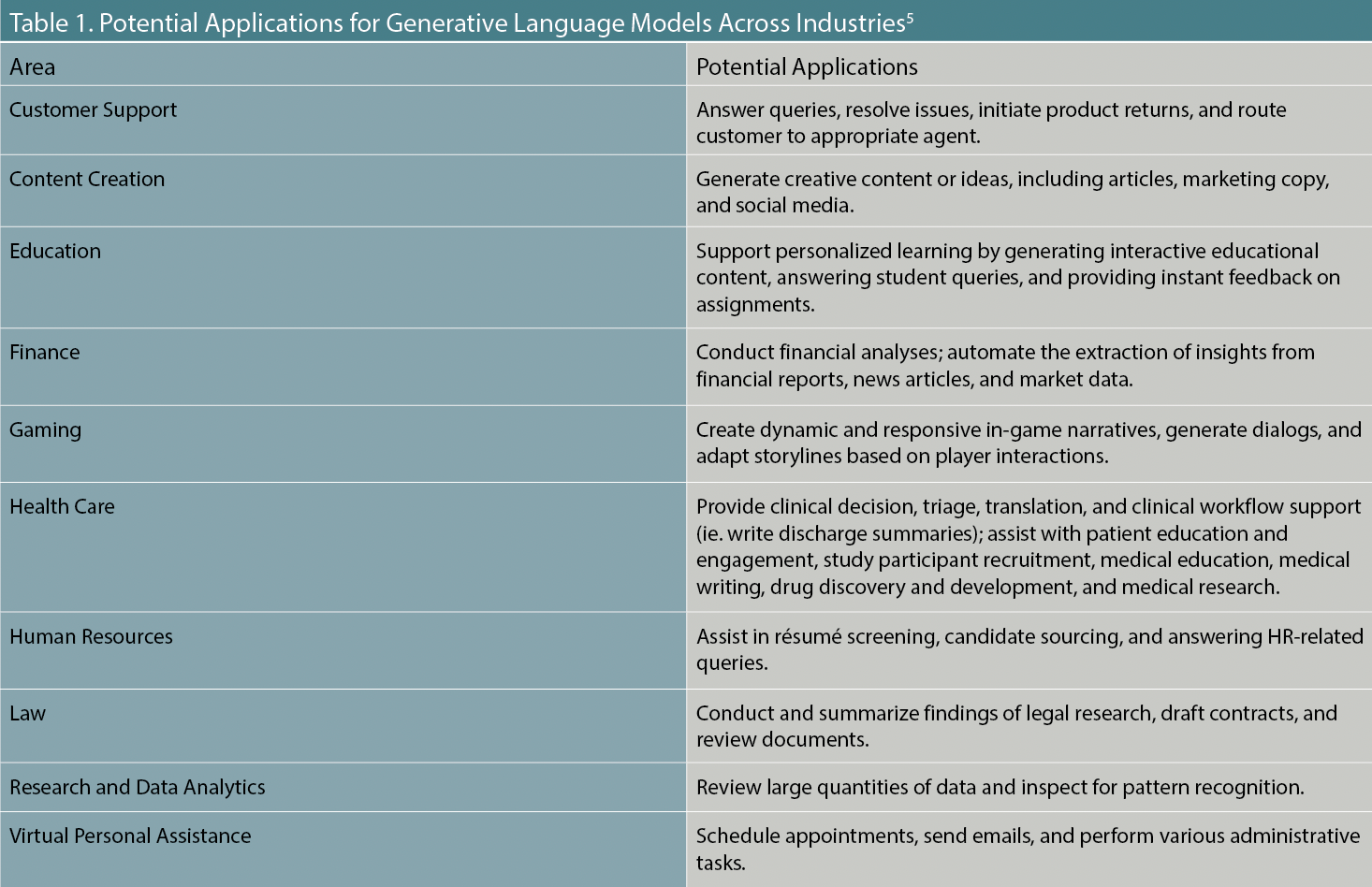

A 2023 report from McKinsey estimates that generative AI's impact on productivity could add the equivalent of $2.6 trillion to $4.4 trillion annually to the global economy.4 LLMs have the potential to transform a variety of industries. From automating and personalizing e-commerce customer support to quickly analyzing and summarizing large volumes of legal text, the opportunities to enhance productivity are extraordinary (Table 1).5

Table 1. Potential Applications for Generative Language Models Across Industries5

Applications in Psychiatry

As in many industries and medical specialties, AI and LLMs could transform how patients receive psychiatric care and how providers deliver it. Although it was trained on general-purpose data, ChatGPT performs many medical and health care tasks well.6 Mental health clinicians may look to AI- and LLM-powered tools to reduce administrative burden and provide clinical decision support. Patients may benefit from these tools for education, self-care, communication with their care teams, and more.

The industry is optimistic because studies have shown high accuracy in potential clinical decision support applications. Deep learning models have shown high accuracy in predicting mental disorder diagnosis and prognosis.7 In a study of 36 clinical vignettes, ChatGPT achieved a final diagnosis accuracy of 76.9% (95% CI 67.8%-86.1%).8

However, this study was conducted in an experimental setting with a small sample of cases. The vignettes may not reflect a patient's real-world clinical presentation or the text a user would naturally enter into the chatbot interface.

Several organizations are piloting ChatGPT, but outcomes of real-world deployment in clinical settings are not yet available.6,9 Real-world clinical use of AI has also not been without controversy: a little-known mental health app went viral when it replaced human responses with AI-generated ones without telling patients.10

The technology's capabilities are evolving rapidly, and practices for its use and ethical oversight must catch up. Although accuracy improves and nonsensical, confabulated outputs become less frequent with each model update, major limitations and concerns persist.11

Key Limitations of Generative Language Models

Despite enthusiasm about AI's potential impact on psychiatry, the field has been cautious and slow to adopt the technology, given its limitations. Although there are many potential limitations, common concerns include the accuracy, quality, and transparency of training data; confabulated outputs ("hallucinations"); and bias.

Diagnostic accuracy can be high in vignette studies, but it drops as case complexity rises. One clinical vignette study showed that for common cases, ChatGPT-4 achieved 100% diagnostic accuracy within its top 3 suggested diagnoses, whereas human physicians solved 90% of cases within their top 2 suggestions but did not reach 100% even with up to 10 suggestions.

For rare cases, ChatGPT-4 solved only 40% with its first suggested diagnosis and needed 8 or more suggestions to reach 90% accuracy. By comparison, physicians approached 50% accuracy within their top 2 suggestions.12
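The "top-k" figures above count a case as solved if the correct diagnosis appears anywhere among the first k suggestions. A minimal sketch of that metric follows; the cases and diagnosis labels are hypothetical and are not data from the cited study.

```python
def top_k_accuracy(cases, k):
    """Fraction of cases whose correct diagnosis appears in the first k ranked suggestions."""
    if not cases:
        raise ValueError("need at least one case")
    hits = sum(1 for truth, ranked in cases if truth in ranked[:k])
    return hits / len(cases)

# Hypothetical cases: (correct diagnosis, model's ranked list of suggested diagnoses)
CASES = [
    ("major depressive disorder", ["major depressive disorder", "bipolar II disorder", "dysthymia"]),
    ("bipolar I disorder", ["schizoaffective disorder", "bipolar I disorder", "ADHD"]),
    ("Wilson disease", ["schizophrenia", "delirium", "substance-induced psychosis"]),
    ("narcolepsy", ["obstructive sleep apnea", "depression", "narcolepsy"]),
]

for k in (1, 2, 3):
    print(f"top-{k} accuracy: {top_k_accuracy(CASES, k):.0%}")
# prints 25%, 50%, and 75% for k = 1, 2, and 3
```

Because a larger k can only add hits, top-k accuracy never decreases as more suggestions are allowed. A rare condition that is absent from the list entirely (the hypothetical Wilson disease case) stays unsolved at any k, which mirrors why rare cases need many more suggestions.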

The transparency, quality, and currency of training data remain challenges. ChatGPT was trained on a large corpus of text data, such as web pages and books.2 If the model does not weigh high- and low-quality evidence differently, it can produce incorrect or inaccurate outputs.13 Because these models have billions of parameters, retraining them requires intensive, expensive computation.11 ChatGPT-3.5 (the free version currently available to the public) was trained on data up to September 2021 and cannot access real-time data or information about events after that date.14

LLMs can generate confabulated outputs known as hallucinations. Hallucinations are particularly concerning in medical settings, where incorrect information can affect morbidity and mortality. ChatGPT may select the correct answer but justify it with a nonsensical explanation. In 1 study, ChatGPT fabricated references within a research paper.15

In another study, when asked a clinical question about the feasibility, safety, and effectiveness of an implantable cardioverter-defibrillator (ICD), ChatGPT cited 2 studies that do not exist.16 ChatGPT may also hallucinate medical knowledge or mathematical deductions.11

Biases in the training data, such as those related to gender, race, and culture, make ChatGPT capable of generating stereotyped and prejudiced content.2,17 In its initial release, when asked to identify who would be a good scientist based on race and gender, ChatGPT answered "white male" and responded negatively for all other subgroups.18

Combatting Limitations With Custom GPTs

In November 2023, OpenAI introduced custom GPTs as a powerful new feature of its flagship product, ChatGPT. The feature allows individual users to tailor the large language model to their specific needs using plain-language instructions. Users can upload datasets and resources and tell the custom GPT which references to use in its responses.

In medicine, AI has shown promise in answering medical questions correctly, but the risk of hallucinations and inaccurate answers currently limits its wider adoption in clinical decision-making.

Large language models do not weigh the validity of their sources; instead, they generate responses from learned word patterns. In medicine, a response based on a peer-reviewed journal article is considered higher quality and more reliable than one based on, for example, an internet chat room. The custom GPT builder aims to address this issue.

Methods

Neuro Scholar, a custom GPT on the OpenAI ChatGPT platform, was created with a focus on neuroscience and psychiatry. Neuro Scholar was instructed to communicate in a formal, academic, and professional tone suited to discussing complex, evidence-based topics in neuroscience and psychiatry. It is designed to ask clarifying questions when needed to ensure accurate and relevant responses.

Neuro Scholar provides comprehensive, evidence-based information on neuroscience and psychiatry topics, adhering strictly to the scientific literature uploaded to its reference collection. The model was also instructed to notify the user when an answer was not available in the uploaded resources and to warn the user when it drew on general knowledge (sources outside the uploaded dataset).
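The article does not describe how custom GPTs search uploaded files, so the following is only a toy illustration of the behavior Neuro Scholar was instructed to follow: answer from the reference collection when a relevant passage exists, and otherwise tell the user that the answer would rely on general knowledge. The `REFERENCES` passages, the stopword list, the keyword-overlap matching, and the `min_overlap` threshold are all hypothetical simplifications; production retrieval systems typically use embedding-based semantic search rather than word overlap.

```python
import re

# Hypothetical reference collection: (source title, passage text).
REFERENCES = [
    ("Hypothetical Psychopharmacology Textbook, ch. 3",
     "Lithium requires serum level monitoring because of its narrow therapeutic index."),
    ("Hypothetical Neuroanatomy Textbook, ch. 7",
     "The hippocampus is critical for the consolidation of declarative memory."),
]

# Hypothetical stopword list; words here are ignored when matching.
STOPWORDS = {"the", "of", "a", "an", "is", "for", "why", "what", "does",
             "its", "because", "and", "to", "in"}

def terms(text):
    """Lowercase content words, with punctuation and stopwords removed."""
    return {w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS}

def grounded_answer(question, references, min_overlap=2):
    """Return the best-matching passage with its source, or a warning if none is relevant."""
    q = terms(question)
    best_source, best_passage, best_score = None, None, 0
    for source, passage in references:
        score = len(q & terms(passage))  # number of shared content words
        if score > best_score:
            best_source, best_passage, best_score = source, passage, score
    if best_score < min_overlap:
        return ("Warning: the uploaded references do not address this question; "
                "any answer would rely on general knowledge outside the reference collection.")
    return f"{best_passage} [Source: {best_source}]"

print(grounded_answer("Why does lithium require serum level monitoring?", REFERENCES))
print(grounded_answer("What is the mechanism of action of ketamine?", REFERENCES))
```

The first question matches the hypothetical psychopharmacology passage and is returned with its source attached; the second matches nothing in the collection, so the sketch returns the warning instead of guessing.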

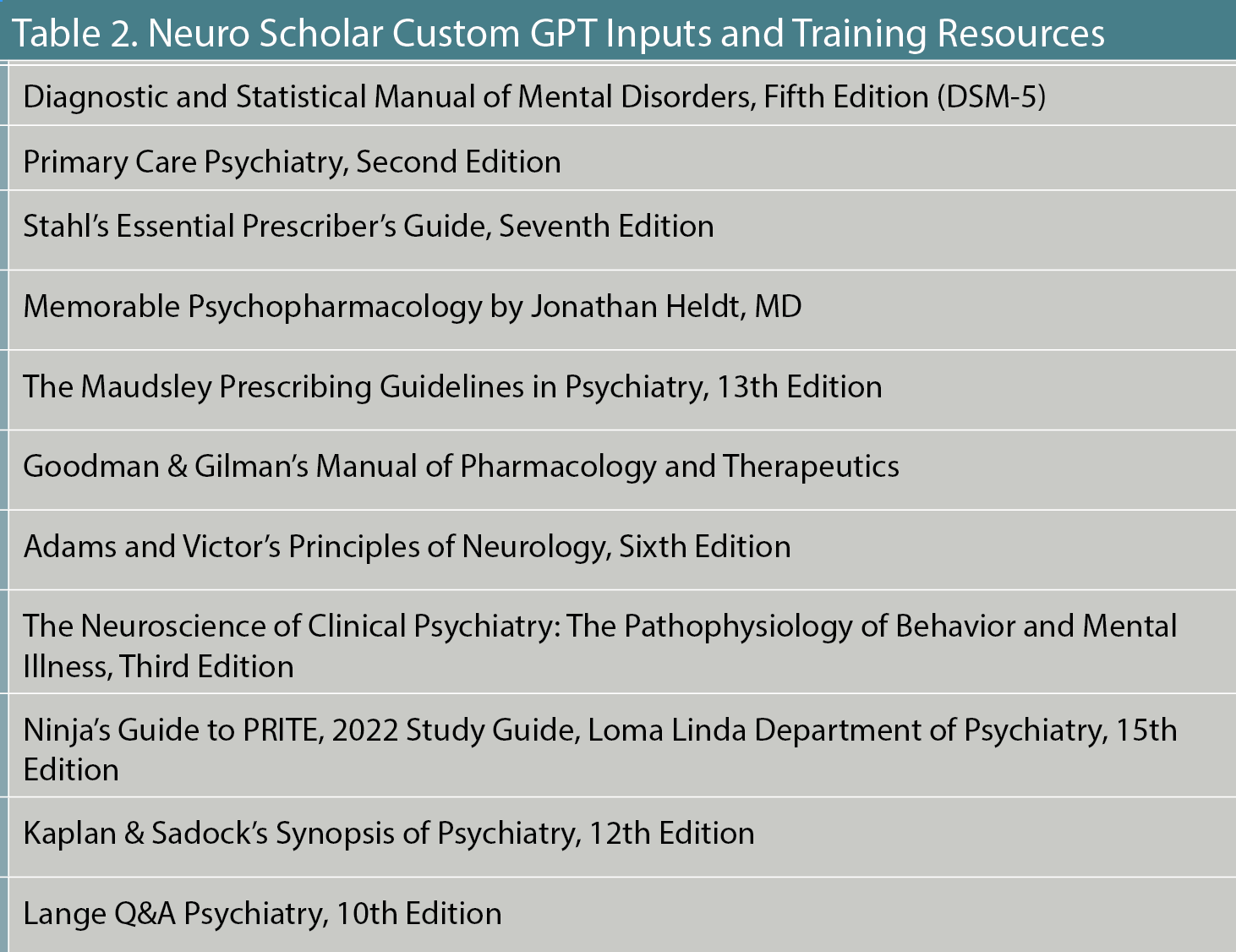

The Neuro Scholar reference collection includes textbooks and other resources that encompass a wide range of topics in neuroscience, psychiatry, and related fields. The uploaded resources used to build this custom GPT are listed in Table 2.

Table 2. Neuro Scholar Custom GPT Inputs and Training Resources

To test Neuro Scholar's accuracy, a practice exam for the American Board of Psychiatry and Neurology (ABPN) examination was selected. Practice Exam 1 from The Psychiatry Test Preparation and Review Manual, Third Edition, which consists of 150 questions, was administered to both Neuro Scholar and ChatGPT-3.5.

Results

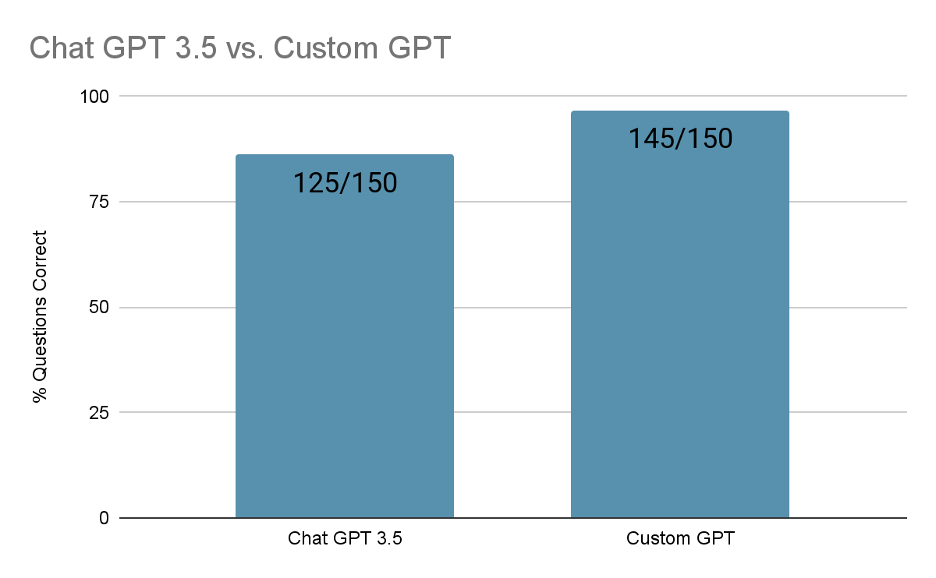

ChatGPT-3.5 correctly answered 125 of 150 questions (83.33% accuracy), whereas Neuro Scholar correctly answered 145 of 150 questions (96.67% accuracy) on the practice exam (Figure).
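The accuracy percentages follow directly from the raw scores. The short sketch below recomputes them from the reported counts, along with the absolute difference between the two models, which the original results do not state explicitly.

```python
TOTAL_QUESTIONS = 150
correct = {"ChatGPT-3.5": 125, "Neuro Scholar": 145}  # counts reported in the results

def accuracy_pct(n_correct, n_total):
    """Percentage of questions answered correctly."""
    return 100 * n_correct / n_total

for model, n in correct.items():
    print(f"{model}: {n}/{TOTAL_QUESTIONS} = {accuracy_pct(n, TOTAL_QUESTIONS):.2f}%")

gap = (accuracy_pct(correct["Neuro Scholar"], TOTAL_QUESTIONS)
       - accuracy_pct(correct["ChatGPT-3.5"], TOTAL_QUESTIONS))
print(f"Difference: {gap:.2f} percentage points")
# prints 83.33% for ChatGPT-3.5, 96.67% for Neuro Scholar, and a 13.33-point difference
```

Put another way, ChatGPT-3.5 missed 25 questions and Neuro Scholar missed 5. Because this is a single 150-question exam, the gap describes this test rather than a statistically tested difference between the models.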

Figure. ChatGPT-3.5 vs Neuro Scholar Custom GPT Performance on the ABPN Practice Exam

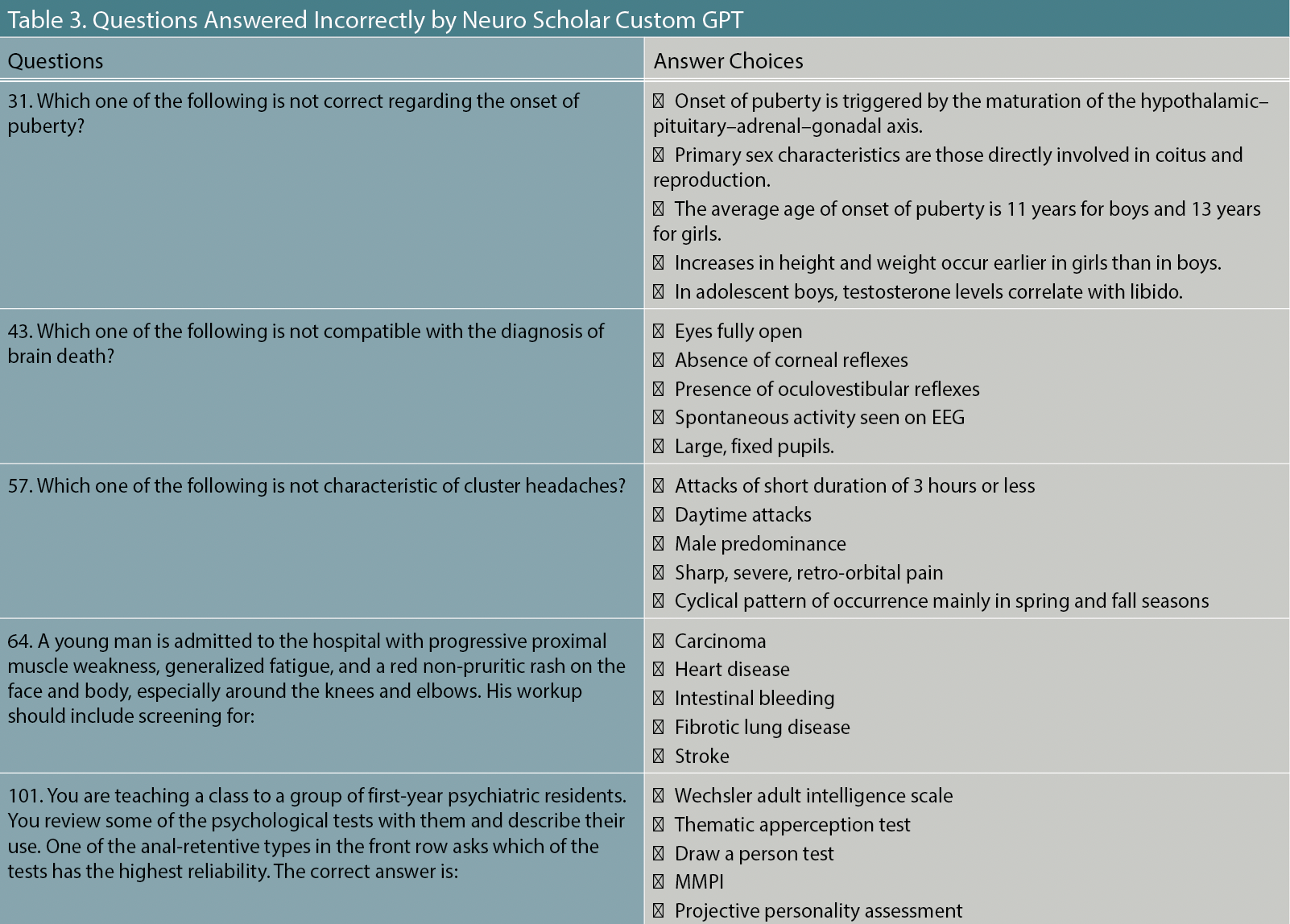

The 5 questions that Neuro Scholar answered incorrectly are listed in Table 3; ChatGPT-3.5 also missed these questions.

Table 3. Questions Answered Incorrectly by Neuro Scholar Custom GPT

Discussion

In just 1 year, AI and large language models have evolved rapidly and are reaching a tipping point with regard to educational and clinical viability. This proof-of-concept experiment suggests that customizing generative AI, by controlling which resources the model draws on, can improve accuracy and may reduce hallucinations. In medicine, AI hallucinations can have disastrous consequences, so efforts to improve AI accuracy must also include efforts to eliminate inaccurate responses.

This proof-of-concept experiment also highlights concerns regarding intellectual property ownership within AI models. The Neuro Scholar custom GPT was built using multiple psychiatry textbooks that were acquired through the secondary market.

Recently, OpenAI announced a partnership with publisher Axel Springer.19 This partnership will formalize OpenAI’s use of the publisher’s content as resources for generative responses. As AI continues to evolve, further guidance will be needed regarding the use of copyrighted materials such as journal articles, textbooks, and reference guides.

Plain-language programming of custom AI models has many exciting implications for psychiatry. In medical education, students can study with AI prompts whose responses focus on a specific learning concept. As a clinical support tool, custom AI models can be configured to provide treatment algorithms that reference all available guidelines for a given condition. Lastly, companies in the psychiatry industry can use these models to provide medical affairs information while controlling which resources are referenced.

Concluding Thoughts

AI was the transformative business development of 2023, and it will continue to evolve and change the landscape of medicine and psychiatry. As clinicians, we must understand AI's potential in psychiatry while remaining aware, and wary, of its limitations. AI may help psychiatry address chronic problems such as poor adherence to treatment guidelines, limited access to care, and the demands of didactic education across multiple professions and training settings.

Mr Asbach is a psychiatric physician associate and serves as associate director of interventional psychiatry at DENT Neurologic Institute. Ms Menon is an independent researcher who is currently freelancing in advisory and contract roles developing clinical AI products. Mr Long is a PA student at Daemen University in Amherst, New York.

References

1. Cheng SW, Chang CW, Chang WJ, et al. The now and future of ChatGPT and GPT in psychiatry. Psychiatry Clin Neurosci. 2023;77(11):592-596.

2. Brown T, Mann B, Ryder N, et al. Language models are few-shot learners. Preprint. 2020.

3. GPT-4 is OpenAI’s most advanced system, producing safer and more useful responses. OpenAI. Accessed December 15, 2023. https://openai.com/gpt-4

4. The economic potential of generative AI: the next productivity frontier. McKinsey Digital. June 14, 2023. Accessed December 30, 2023. https://www.mckinsey.com/capabilities/mckinsey-digital/our-insights/the-economic-potential-of-generative-ai-the-next-productivity-frontier#introduction

5. Sallam M. ChatGPT utility in healthcare education, research, and practice: systematic review on the promising perspectives and valid concerns. Healthcare (Basel). 2023;11(6):887.

6. Li J, Dada A, Kleesiek J, Egger J. ChatGPT in healthcare: a taxonomy and systematic review. Preprint. March 30, 2023. Accessed December 30, 2023. https://www.medrxiv.org/content/10.1101/2023.03.30.23287899v1

7. Allesøe RL, Thompson WK, Bybjerg-Grauholm J, et al. Deep learning for cross-diagnostic prediction of mental disorder diagnosis and prognosis using Danish nationwide register and genetic data [published correction appears in JAMA Psychiatry. 2023 Jun 1;80(6):651]. JAMA Psychiatry. 2023;80(2):146-155.

8. Rao A, Pang M, Kim J, et al. Assessing the utility of ChatGPT throughout the entire clinical workflow: development and usability study. J Med Internet Res. 2023;25:e48659.

9. Diaz N. 6 hospitals, health systems testing out ChatGPT. Becker’s Health IT. June 2, 2023. Accessed December 30, 2023. https://www.beckershospitalreview.com/innovation/4-hospitals-health-systems-testing-out-chatgpt.html

10. Ingram D. A mental health tech company ran an AI experiment on real users. Nothing's stopping apps from conducting more. NBC News. January 14, 2023. Accessed December 30, 2023. https://www.nbcnews.com/tech/internet/chatgpt-ai-experiment-mental-health-tech-app-koko-rcna65110

11. Wang Y, Visweswaran S, Kappor S, et al. ChatGPT, enhanced with clinical practice guidelines, is a superior decision support tool. Preprint. August 13, 2023. Accessed December 30, 2023. https://www.researchgate.net/publication/373190004_ChatGPT_Enhanced_with_Clinical_Practice_Guidelines_is_a_Superior_Decision_Support_Tool

12. Mehnen L, Gruarin S, Vasileva M, Knapp B. ChatGPT as a medical doctor? A diagnostic accuracy study on common and rare diseases. Preprint. April 26, 2023. Accessed December 15, 2023. https://www.researchgate.net/publication/370314255_ChatGPT_as_a_medical_doctor_A_diagnostic_accuracy_study_on_common_and_rare_diseases

13. Mello MM, Guha N. ChatGPT and physicians' malpractice risk. JAMA Health Forum. 2023;4(5):e231938.

14. Models. OpenAI. Accessed December 30, 2023. https://platform.openai.com/docs/models

15. Alkaissi H, McFarlane SI. Artificial hallucinations in ChatGPT: implications in scientific writing. Cureus. 2023;15(2):e35179.

16. Siontis KC, Attia ZI, Asirvatham SJ, Friedman PA. ChatGPT hallucinating: can it get any more humanlike? Eur Heart J. 2023.

17. Ray PP. ChatGPT: a comprehensive review on background, applications, key challenges, bias, ethics, limitations and future scope. Internet of Things and Cyber-Physical Systems. 2023;3(1):121-154.

18. Singh S, Ramakrishnan N. Is ChatGPT biased? A review. Preprint. April 2023. Accessed December 30, 2023. https://www.researchgate.net/publication/369899967_Is_ChatGPT_Biased_A_Review

19. Partnership with Axel Springer to deepen beneficial use of AI in journalism. OpenAI. December 13, 2023. Accessed December 30, 2023. https://openai.com/blog/axel-springer-partnership
