Attention to Attention is What You Need: Artificial Intelligence and Medicine

Here's how medical practitioners and researchers can inform AI reasoning and influence the future of AI development and deployment.

In just a few months, artificial intelligence (AI) has exploded onto the stage in a way that has surprised many. Take, for instance, the mass popularity of ChatGPT and related language models such as GPT-3, GPT-2, and BERT. The scale of these models, combined with advances in computing power and the availability of large data sets, has provided fertile ground for AI to take off.1,2

In medicine, we are used to approaches to diagnosis and treatment that are rooted in a deep understanding of disease processes and informed by critical appraisal of evidence-based strategies and by experience over time. Medicine has adapted to and kept pace with various emerging technologies and, as a field, has made many advances.3 Part of medicine's heuristic and epistemological approach is that technology has always been a tool applied in service of the medical process.4

Agency and control have been at the forefront of how we use tools. However, the introduction of new tools has often brought initial trepidation. Looking at the evolution of tools over time, nearly every new tool has, in some ways, brought on initial anxiety and fear. One can only imagine the angst of a painter at the emergence of photography, and yet painting and art have not been displaced.

The emergence of AI has generated much concern, even for those embedded in the technological field. An approach to machine learning and artificial intelligence should probably stem from an understanding of what it is and what it can do. By taking this approach, we position ourselves to inform industry and help solve meaningful problems within an ethical and value-based framework.

The emergence of new technology and its adoption in society have brought on various emotions along the way, and a number of researchers have explored this area. One particular model is Gartner's Hype Cycle, in which the arrival of a new technology is followed by a peak of excitement, then a phase of disillusionment, and then a normalization phase in which its utility and limitations are understood.

Another heuristic for understanding emerging technology comes from economics. The Kondratiev Wave theory describes long-term cycles in the economy and links them with technology. Carlota Perez, a researcher in the field of paradigm shifts, defines a technological revolution as a powerful and highly visible cluster of new and dynamic technologies, products, and industries capable of bringing about an upheaval in the whole fabric of the economy and propelling a long-term upsurge of development.

It is quite astounding that a machine can read large amounts of data and identify and emulate patterns, yet, at its heart, not quite understand what it is doing. So, although the technology can rapidly incorporate an immense amount of knowledge that often took many years to cultivate, it still struggles with reasoning.

For those of us in medicine, it is hard to imagine a system that emulates what we do: refine the diagnostic process; apply knowledge to patterns based on genetics, epigenetics, life experiences, and responses to various medication therapies; and then fine-tune this to each patient while seeing it from the individual’s perspective and values.

So, one may ask, what is the concern? A recent letter from several technology leaders spoke to the concerns around the rapid deployment of AI.5

In some ways, these technological innovations have always had human beings behind the controls. What is currently challenging and concerning for many, including those in computer science and engineering, is the lack of clarity about how the machine itself reasons and the risks this can pose. However, although the genie is out of the lamp, we can try to position ourselves at the front and center of the decision-making process and help inform innovators, inventors, and data scientists.

Much of machine learning is based on teaching a machine how to learn and reason, drawing on a number of mathematical models. To understand the underlying AI technology, it helps to take a closer look at how AI models are structured.

Machine Learning Models: Recurrence, Convolution, and Transformers

Recurrence, convolution, and transformers are 3 important concepts in AI that have been widely used in machine learning models. Recurrence helps models remember what happened before, convolution finds important patterns in data, and transformers focus on understanding relationships between different parts of the input.

Recurrence

Think of recurrence as a memory that helps a model remember information from previous steps. It is useful when dealing with things that happen in a specific order or over time. For example, if you are predicting the next word in a sentence, recurrence helps the model understand the words that came before it. It is like connecting the dots by looking at what happened before to make sense of what comes next.
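To make the idea of "memory" concrete, here is a minimal sketch of a simple (Elman-style) recurrent layer in Python with NumPy. It is an illustration rather than how any particular production model is built: the weights are random instead of learned, and the sizes (`input_dim`, `hidden_dim`, `seq_len`) and the helper name `rnn_forward` are hypothetical choices for this example. The hidden state `h` plays the role of memory: each step combines the current input with the state left over from the previous step.

```python
import numpy as np

def rnn_forward(inputs, W_x, W_h, b):
    """Run a simple (Elman-style) recurrent layer over a sequence.

    inputs has shape (seq_len, input_dim): one row per time step.
    Returns the hidden state after every step, shape (seq_len, hidden_dim).
    """
    h = np.zeros(W_h.shape[0])  # the memory starts empty
    states = []
    for x_t in inputs:
        # Mix the current input with the previous state, so information
        # from earlier steps is carried forward to later ones.
        h = np.tanh(W_x @ x_t + W_h @ h + b)
        states.append(h)
    return np.stack(states)

# Illustrative sizes and random (untrained) weights.
rng = np.random.default_rng(0)
input_dim, hidden_dim, seq_len = 4, 3, 5
W_x = rng.normal(scale=0.5, size=(hidden_dim, input_dim))
W_h = rng.normal(scale=0.5, size=(hidden_dim, hidden_dim))
b = np.zeros(hidden_dim)

sequence = rng.normal(size=(seq_len, input_dim))  # 5 steps, 4 features each
states = rnn_forward(sequence, W_x, W_h, b)
print(states.shape)  # (5, 3)

# Change only the FIRST step and compare the LAST hidden state.
altered = sequence.copy()
altered[0] += 1.0
final_shift = np.abs(rnn_forward(altered, W_x, W_h, b)[-1] - states[-1]).max()
print(final_shift)  # nonzero: the early input still influences the end
```

Because the state is passed forward one step at a time, nudging only the first input still changes the final state, which is the "connecting the dots" behavior described above. The same step-by-step dependence is also why recurrent models are hard to parallelize and can struggle to retain information over long sequences.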

Convolution

Convolution is like a filter that helps the model find important patterns in data. It is commonly used for tasks involving images or grids of data. Just like our brain focuses on specific parts of an image to understand it, convolution helps the model focus on important details. It looks for features like edges, shapes, and textures, allowing the model to recognize objects or understand the structure of the data.
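A minimal sketch of the filtering idea, assuming a toy 6x6 grayscale "image" and a hand-picked vertical-edge kernel. In a trained convolutional network the kernel values are learned from data rather than chosen by hand. The helper `convolve2d` is hypothetical and, like most deep learning libraries, computes cross-correlation (the kernel is not flipped).

```python
import numpy as np

def convolve2d(image, kernel):
    """Slide a small kernel over a 2D image, keeping only 'valid' positions.

    Each output value is the weighted sum of the image patch under the kernel.
    """
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            patch = image[i:i + kh, j:j + kw]
            out[i, j] = np.sum(patch * kernel)
    return out

# Toy 6x6 "image": dark on the left half, bright on the right half.
image = np.zeros((6, 6))
image[:, 3:] = 1.0

# Vertical-edge filter: responds where brightness changes from left to right.
kernel = np.array([[-1.0, 0.0, 1.0],
                   [-1.0, 0.0, 1.0],
                   [-1.0, 0.0, 1.0]])

response = convolve2d(image, kernel)
print(response)  # every row is [0, 3, 3, 0]
```

The filter stays silent over flat regions and responds only where dark meets bright, which is the kind of edge feature a convolutional layer can pick out before later layers combine edges into shapes and objects.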

Transformers

Transformers are like smart attention machines. They excel in understanding relationships between different parts of a sentence or data without needing to process them in order. They can find connections between words that are far apart from each other. Transformers are especially powerful in tasks like language translation, where understanding the context of each word is crucial. They work by paying attention to different words and weighing their importance based on their relationships.

How Transformers Became So Impactful

A landmark 2017 paper titled “Attention Is All You Need” by Vaswani and colleagues6 introduced the transformer model. Unlike models built on recurrence and convolution, the transformer relies heavily on the self-attention mechanism. Self-attention allows the model to focus on different parts of the input sequence during processing, enabling it to capture long-range dependencies effectively. Attention mechanisms allow the model to model dependencies between input and output sequences regardless of their distance in the sequence. This gives the model remarkably advanced capabilities, especially when combined with modern computing power.
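The core computation described by Vaswani and colleagues is scaled dot-product attention, softmax(Q Kᵀ / √d_k) V, where the queries Q, keys K, and values V are linear projections of the input. The sketch below is a single-head version in NumPy with random projection matrices. It deliberately omits the multiple heads, positional encodings, masking, and feed-forward layers of a full transformer, and the names (`self_attention`, `W_q`, `W_k`, `W_v`) and sizes are illustrative assumptions.

```python
import numpy as np

def softmax(scores):
    # Subtract the row max for numerical stability before exponentiating.
    shifted = scores - scores.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)

def self_attention(X, W_q, W_k, W_v):
    """Single-head scaled dot-product self-attention.

    X has shape (seq_len, d_model); each row is one token's embedding.
    Returns the attended output and the attention weights.
    """
    Q, K, V = X @ W_q, X @ W_k, X @ W_v
    d_k = K.shape[-1]
    # Every position scores every other position in one matrix product,
    # so distance in the sequence plays no special role.
    scores = Q @ K.T / np.sqrt(d_k)
    weights = softmax(scores)  # each row is a probability distribution
    return weights @ V, weights

rng = np.random.default_rng(42)
seq_len, d_model, d_k = 5, 8, 4
X = rng.normal(size=(seq_len, d_model))  # stand-in token embeddings
W_q = rng.normal(size=(d_model, d_k))
W_k = rng.normal(size=(d_model, d_k))
W_v = rng.normal(size=(d_model, d_k))

output, weights = self_attention(X, W_q, W_k, W_v)
print(output.shape)          # (5, 4)
print(weights.sum(axis=1))   # each row of attention weights sums to 1
```

Row i of `weights` shows how strongly position i attends to every other position. Because all pairs are scored in a single matrix product, the first and last positions interact as directly as neighbors do, which is what lets attention capture long-range dependencies and why it maps well onto parallel hardware.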

Machine Learning Frameworks

Currently, there are several frameworks that can be applied to the machine learning process:

- CRISP-DM: This is a popular framework for the machine learning process; its name stands for Cross-Industry Standard Process for Data Mining.

- MADlib: This is an open-source library for scalable in-database analytics; the “MAD” in its name refers to magnetic, agile, and deep data analysis.

- FACES: This acronym stands for feature engineering, algorithm selection, cross-validation, evaluation, and selection, remembered by “put on your best FACES for machine learning.”

- TEAMS: This acronym stands for task definition, exploratory data analysis, algorithm selection, and model evaluation.

- PIPE: This stands for problem definition, information gathering, pre-processing, and evaluation.

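The CRISP-DM approach defines 6 core phases: business understanding, data understanding, data preparation, modeling, evaluation, and deployment. Each is described below with the role clinicians can play, followed by a final evaluation step: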

- Business understanding: The goals and objectives of the project are defined, and the problem to be addressed is identified. Those of us in the medical field can provide valuable insights here. We might be able to identify a problem in patient care that could be addressed with data analytics, such as reducing readmission rates or improving a particular outcome. For example, if a hospital is trying to reduce the rate of readmissions among patients with heart failure, physicians might inform the project by identifying the most common causes of readmission and the factors that contribute to poor outcomes, such as medication adherence and follow-up.

- Data understanding: The required data is collected, explored, and analyzed. This includes understanding the data source, data quality, and data structure. Clinicians can provide expertise in clinical data, such as medical codes, lab results, and electronic health records. They can also help by identifying potential data sources and providing insights into the quality and structure of the data. Some practical examples of this include understanding relevant data such as patient demographics, vital signs, and lab results, and providing insight into the completeness of data, potential bias, or confounding factors that might affect the analysis.

- Data preparation: In this phase, data is cleaned, transformed, and formatted for analysis, including tasks such as data integration, data selection, and data transformation. Clinicians can help ensure that the data is handled in a clinically relevant way, that data from different sources is integrated effectively, and that the data is properly selected and transformed for analysis. They can also help identify missing data and outliers or data points that may skew the analysis, and make recommendations accordingly.

- Modeling: The data is modeled using techniques such as regression analysis, clustering, and decision trees to identify patterns and relationships and to make predictions. Clinicians can apply medical insight to the selection of appropriate models and algorithms; for example, we might recommend predictive models that have proven effective in clinical settings. Physicians can provide expertise in clinical decision-making, help choose the most appropriate modeling techniques, and guide the interpretation of the results and how to apply them to clinical practice.

  Some approaches within the modeling step might be:

  - Feature selection: Select the most relevant features or variables to include in the model (eg, age, gender, comorbidities).

  - Algorithm selection: Help select the algorithms best suited to the analysis, as different algorithms may suit different types of data and clinical questions. Decision trees may be useful for some subgroups of patients, whereas logistic regression might be useful for predicting probabilities in others.

  - Clinical interpretability: Help determine which models are more clinically interpretable and easier to translate into clinical practice. An example is defining the cutoffs for risk scores, as with the Framingham Risk Score, and determining the appropriate interventions.

- Evaluation: Help evaluate the models, determine their clinical relevance and accuracy, and identify any potential biases or limitations in the analysis, such as differences in patient populations and care settings. For example, use sensitivity and specificity analysis to determine how well the model identifies patients at risk.

  - Clinical relevance: Are these clinically relevant outcomes? Does the model have an impact on clinical decisions and patient outcomes (eg, prompting additional care)?

  - Model accuracy: Compare predicted outcomes with actual outcomes using measures such as sensitivity, specificity, and area under the receiver operating characteristic curve (AUC) to assess model performance.

  - Model validation: Use cross-validation, which repeatedly splits the data into training and testing sets, to see whether the model can generalize to new data. Alternatively, use external validation by applying the model to a new dataset and assessing whether it performs well across different patient populations or care settings.

  - Bias and fairness: Is the model fair and unbiased? Does it perform consistently across different groups?

- Deployment: In this phase, the models are deployed into the operational environment and the project is finalized. This includes documenting the project, integrating the models into existing systems, and ensuring that they are used appropriately. The performance of the models can also be monitored over time and adjusted as needed. Clinicians can help make sure the models are implemented into clinical practice appropriately, for example, by incorporating the risk score into the electronic health record, alerting the care team when a patient is off track in their care plan, and monitoring performance against goals over time.

- Final evaluation: The models are evaluated and tested to determine their accuracy and effectiveness. This includes comparing the results of different models and selecting the best one. There is an opportunity to help interpret the results of the models in a clinically meaningful way and identify any potential biases or limitations.
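The evaluation measures mentioned in these phases (sensitivity, specificity, AUC, and performance across groups) can be sketched in plain Python. Everything here is hypothetical: the 12 patients, their 30-day readmission outcomes, the model's risk scores, the two sites, and the 0.5 cutoff are made up for illustration, and the helper names are not from any library. AUC is computed as the probability that a randomly chosen readmitted patient receives a higher score than a randomly chosen non-readmitted one (ties count as half), which equals the area under the ROC curve.

```python
from itertools import product

def confusion_counts(y_true, scores, cutoff):
    """Return (tp, fp, tn, fn), treating score >= cutoff as a 'high risk' flag."""
    tp = fp = tn = fn = 0
    for y, s in zip(y_true, scores):
        flagged = s >= cutoff
        if flagged and y == 1:
            tp += 1
        elif flagged:
            fp += 1
        elif y == 0:
            tn += 1
        else:
            fn += 1
    return tp, fp, tn, fn

def sensitivity_specificity(y_true, scores, cutoff):
    tp, fp, tn, fn = confusion_counts(y_true, scores, cutoff)
    sensitivity = tp / (tp + fn)  # share of readmitted patients who were flagged
    specificity = tn / (tn + fp)  # share of non-readmitted patients left unflagged
    return sensitivity, specificity

def auc(y_true, scores):
    """Probability a random positive outscores a random negative (ties count 0.5)."""
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p, n in product(pos, neg))
    return wins / (len(pos) * len(neg))

def report(label, y_true, scores, cutoff=0.5):
    sens, spec = sensitivity_specificity(y_true, scores, cutoff)
    print(f"{label}: sensitivity={sens:.2f} specificity={spec:.2f} "
          f"AUC={auc(y_true, scores):.2f}")

# Hypothetical data: 1 = readmitted within 30 days, 0 = not readmitted.
y_true = [1, 1, 1, 0, 0, 0, 1, 1, 0, 0, 0, 0]
scores = [0.9, 0.7, 0.4, 0.3, 0.2, 0.6, 0.8, 0.35, 0.5, 0.1, 0.45, 0.2]
sites = ["A"] * 6 + ["B"] * 6

report("Overall", y_true, scores)  # sensitivity=0.60 specificity=0.71 AUC=0.83

# Bias and fairness check: does performance hold up in each subgroup?
for site in sorted(set(sites)):
    idx = [i for i, s in enumerate(sites) if s == site]
    report(f"Site {site}", [y_true[i] for i in idx], [scores[i] for i in idx])
# Site A: sensitivity=0.67 specificity=0.67 AUC=0.89
# Site B: sensitivity=0.50 specificity=0.75 AUC=0.75
```

With a sample this small, the differences between sites are illustrative only; a real evaluation would use far larger data, held-out or cross-validated estimates, and confidence intervals before drawing conclusions about fairness. Moving the cutoff trades sensitivity against specificity, which is where clinical judgment about the cost of missed cases versus false alarms comes in.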

Concerns With AI

In medicine and psychiatry, we are familiar with distortions that can arise in human thinking. We know that “thinking about what we are thinking about” becomes an important skill in training the mind. In AI, the loss of human control and input in informing the machines is at the heart of many concerns. There are several reasons for this.

- Complex systems: AI systems are complex. Their underlying mechanisms are difficult to interpret, which makes it hard to understand the reasoning behind some of their decisions.

- Data dependence: AI models heavily rely on large amounts of training data to learn patterns and make accurate predictions. Insufficient or biased data can lead to skewed results and reinforce existing biases present in the data, perpetuating social inequalities and discrimination.

- Transparency: Many AI systems offer little insight into how they reach their outputs, which raises concerns about accountability, fairness, and potential biases in AI-generated outcomes.

- Jobs and skills: As AI technologies advance, there is a concern about the potential impact on the job market. Automation driven by AI may lead to significant job displacement, requiring society to adapt to changing employment dynamics and develop new skill sets.

- Unintended consequences: AI systems are designed to optimize specific objectives, but may exhibit unintended behaviors or biases that were not explicitly programmed. These unintended consequences can have real-world implications.

- Ethical considerations: AI raises several ethical concerns, including issues related to privacy, security, and consent. AI systems may have access to vast amounts of personal data, which can be misused or compromised.

Addressing these concerns requires a comprehensive approach that emphasizes transparency, accountability, fairness, and human oversight in the development and deployment of AI systems. It is crucial to consider the societal impact of AI and to establish regulations and guidelines that ensure its responsible and ethical use.

Positives and Negatives in the Medical Community

For the medical community specifically, this new technology brings both positives and negatives. By leveraging the potential of AI while addressing its limitations and concerns, health care can benefit from improved diagnostics, more efficient care delivery, and better decision support.

Positive aspects:

- Enhanced diagnosis and treatment: AI can analyze vast amounts of medical data, including patient records, imaging scans, and research papers, to help health care professionals make accurate diagnoses and develop personalized treatment plans. AI algorithms can identify patterns and detect early signs of diseases, potentially improving patient outcomes.

- Efficient health care delivery: AI has the potential to streamline health care processes, such as automating administrative tasks, optimizing resource allocation, and reducing wait times. This efficiency can lead to cost savings and improved access to care for patients.

- Precision medicine: By leveraging AI algorithms, medical professionals can analyze genetic and molecular data to identify targeted treatments and predict patient responses. This approach enables personalized medicine, or tailoring interventions based on an individual’s unique characteristics, which can lead to more effective therapies and better patient outcomes.

- Decision support systems: AI-powered decision support systems can provide clinicians with evidence-based recommendations, drug interaction alerts, and treatment guidelines, aiding in complex decision-making processes. This assistance can improve diagnostic accuracy, reduce medical errors, and enhance patient safety.

Negative aspects:

- Lack of human oversight: Overreliance on AI systems may diminish the role of health care professionals, potentially leading to complacency or the loss of critical thinking skills. It is important to maintain human control and ensure that AI algorithms are used as tools to support, rather than replace, medical expertise.

- Ethical considerations: The use of AI in health care raises ethical concerns regarding privacy, security, and consent. Patient data privacy must be protected, and measures should be in place to ensure the secure storage and transmission of sensitive medical information. Additionally, the deployment of AI systems in sensitive areas, such as end-of-life decisions and mental health assessments, requires careful consideration of ethical implications.

- Interpretability and transparency: Some AI models, particularly deep learning models, can be challenging to interpret, making it difficult for medical professionals to understand the reasoning behind AI-generated predictions. Ensuring transparency and explainability of AI algorithms is crucial for gaining trust and acceptance from clinicians.

- Data bias and generalizability: AI algorithms rely heavily on training data, which may introduce biases if not adequately addressed. Biased data can lead to inaccurate or discriminatory results, particularly for underrepresented patient populations. Efforts should be made to ensure that training datasets are diverse and representative, and what is learned about data gaps should be used to inform policymakers and AI developers.

Evaluating AI Technology

One way for physicians and health care workers to evaluate AI technology might be a framework similar to the evidence-based appraisal tools we already use. Here are some guiding questions for evaluating the technology:

- Is the AI doing good work?

- Is there any harm?

- Is there an approach to address fairness, bias, and error?

- How does the technology work?

- What inputs have been applied to help the model with its reasoning?

- How can we reduce biased or misleading information?

- What are the values being factored in?

- What are the benefits of the new AI tool?

- Is the source information accurate and balanced?

- What systems have been applied?

- Has a system of evaluation been applied to the data?

- Is there transparency of data outcomes?

- What are the consequences (intentional or unintentional) to society?

- What are the real-world impacts?

- Has an ethical framework been applied?

- What is the level of human oversight?

- Can the models be explained and interpreted easily?

- Is there any potential data bias?

- Have efforts been made to ensure that the data sets are representative?

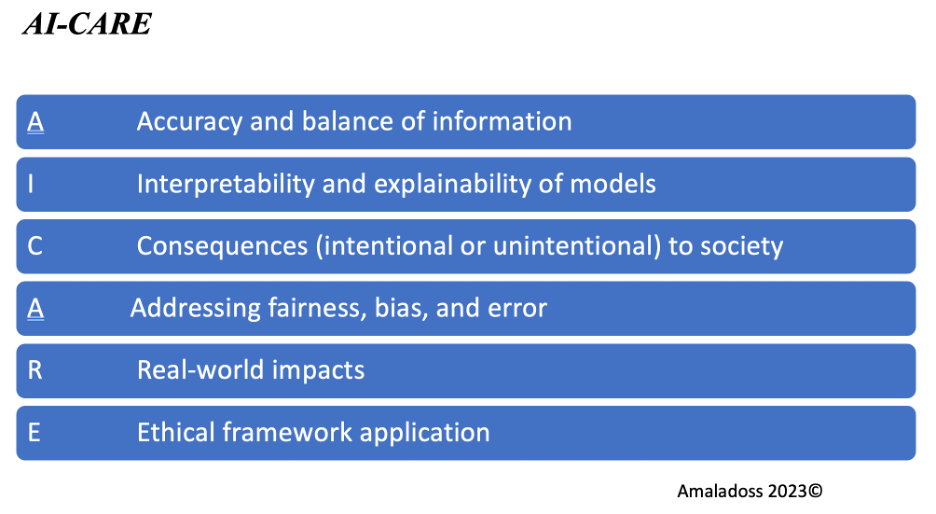

Figure 1. AI-CARE

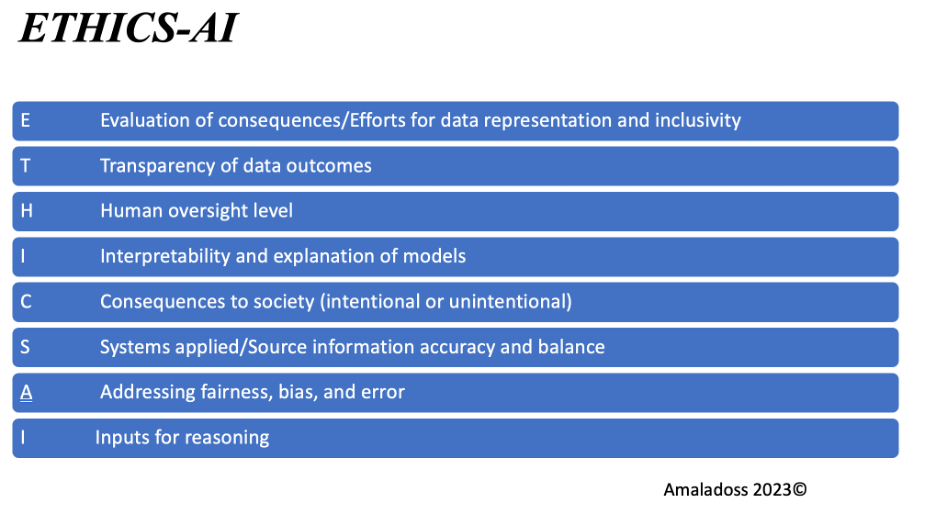

Figure 2. ETHICS-AI

A couple of suggested evaluation tools that can be used in interpreting AI models in health care are listed in Figures 1 and 2. These mnemonics can serve as a framework for health care professionals to systematically evaluate and interpret AI models, ensuring that ethical considerations, transparency, and accuracy are prioritized in the implementation and use of AI in health care.

Dr Amaladoss is a clinical assistant professor in the Department of Psychiatry and Behavioral Neurosciences at McMaster University. He is a clinician scientist and educator who has been a recipient of a number of teaching awards. His current research involves personalized medicine and the intersection of medicine and emerging technologies including developing machine learning models and AI in improving health care. Dr Amaladoss has also been involved with the recent task force on AI and emerging digital technologies at the Royal College of Physicians and Surgeons.

Dr Ahmed is an internal medicine resident at the University of Toronto. He has led and published research projects in multiple domains including evidence-based medicine, medical education, and cardiology.

References

1. Topol EJ. High-performance medicine: the convergence of human and artificial intelligence. Nat Med. 2019;25(1):44-56.

2. Szolovits P, ed. Artificial Intelligence in Medicine. Routledge; 1982.

3. London AJ. Artificial intelligence in medicine: overcoming or recapitulating structural challenges to improving patient care? Cell Rep Med. 2022;3(5):100622.

4. Larentzakis A, Lygeros N. Artificial intelligence (AI) in medicine as a strategic valuable tool. Pan Afr Med J. 2021;38:184.

5. Mohammad L, Jarenwattananon P, Summers J. An open letter signed by tech leaders, researchers proposes delaying AI development. NPR. March 29, 2023. Accessed August 1, 2023. https://www.npr.org/2023/03/29/1166891536/an-open-letter-signed-by-tech-leaders-researchers-proposes-delaying-ai-developme

6. Vaswani A, Shazeer N, Parmar N, et al. Attention is all you need. NIPS. June 12, 2017. Accessed August 10, 2023. https://www.semanticscholar.org/paper/Attention-is-All-you-Need-Vaswani-Shazeer/204e3073870fae3d05bcbc2f6a8e263d9b72e776
