OpenAI Finally Admits ChatGPT Causes Psychiatric Harm

Key Takeaways

- OpenAI admits ChatGPT's potential harm to vulnerable users and commits to safety improvements, including crisis detection and reducing sycophancy.

- Skepticism persists due to OpenAI's history of prioritizing profit over safety, despite its nonprofit origins.

OpenAI acknowledges ChatGPT's risks to psychiatric patients and commits to improving safety measures, but skepticism about their sincerity remains.

AI CHATBOTS: THE GOOD, BAD, AND THE UGLY

In my previous piece, we explored ways that chatbots are dangerous for psychiatric patients.1

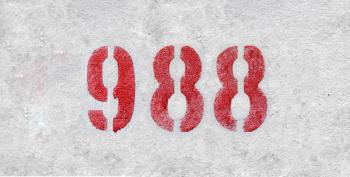

Now, I can report surprising and encouraging news: OpenAI has finally acknowledged that ChatGPT is harming vulnerable users and promises to take corrective action. The company now openly admits that its chatbot is "too agreeable, sometimes saying what sounded nice instead of what was actually helpful... not recognizing signs of delusion or emotional dependency." OpenAI has also committed to developing "tools to better detect signs of mental or emotional distress" and has suggested specific changes that would make ChatGPT significantly less dangerous: reducing sycophancy, not pulling for screen time, trying to minimize chatbot addiction, improving crisis detection, better identifying people with psychiatric problems, and providing guardrails on advice giving.2

OpenAI also promises to markedly expand the role of mental health professionals in programming decisions so that harmful unintended consequences can be anticipated and avoided. "We're actively deepening our research into the emotional impact of AI. We're developing ways to scientifically measure how ChatGPT's behavior might affect people emotionally, and listening closely to what people are experiencing."3

This all sounds great—and I want to be hopeful that OpenAI is sincere and that our patients will be safer. But there are 2 reasons to remain skeptical:

1) It is hard to trust the words of OpenAI (and its boss Sam Altman) about future plans because both have been so deceptive in the past; and

2) None of the other big AI companies have so far followed suit.

History’s Cautionary Tale

OpenAI's 10-year history provides a fascinating cautionary tale in saying one thing and doing the opposite. Sam Altman and Elon Musk created OpenAI as a nonprofit research company whose stated purpose was purely altruistic: to benefit humanity and protect it from the potential harms of artificial intelligence. OpenAI would differ from profit-driven companies: its research would be open-source and collaborative, and its creations would be safe and beneficial. The idea was Altman's, the billion-dollar investment was Musk's, and Altman was made CEO.

In 2017, Google reported fundamental technical discoveries that changed the AI playing field, pointing to the possibility that chatbots would soon be capable of passing the Turing Test.5 Whoever got there first in creating the most fluent chatbot would reap unimaginable fortunes.

Smelling big bucks, Musk and Altman suddenly lost their altruism and benevolence and instead engaged in a bitter power struggle over how to distribute expected spoils. Musk wanted to merge profitable parts of OpenAI with Tesla. Altman wanted to develop a for-profit subsidiary to OpenAI. The company’s board sided with Altman and, in early 2018, Musk abruptly and angrily left OpenAI.6

In 2019, OpenAI's for-profit subsidiary raised massive funding, particularly from Microsoft and Softbank, but also from the cream of the crop of Silicon Valley investment firms.7 The subsidiary was ruthlessly profit-driven, oblivious to OpenAI's original safety mission, and much more powerful than the oversight board that it theoretically reported to.

OpenAI was suddenly transformed from the most benevolent force in the AI industry to its most dangerous. The original noble aims of benefiting humanity and keeping AI safe for society gave way to commercial goals of fiercely beating the competition in tech development, gaining the bulk of market share, and maximizing market capitalization.

In November 2022, OpenAI's for-profit subsidiary released ChatGPT to the public in the most deceptive and reckless way possible: as a research tool in a beta test, without having done the necessary extensive stress testing to ensure it would be safe once in wide distribution.8 The response was a marketer's dream come true—and Sam Altman is a master marketer. It took just 2 months for ChatGPT to have 100 million monthly users, and it now has over 700 million weekly users—about 60% of market share. OpenAI already has a market value of about 500 billion dollars before ever turning a profit.9

Increasingly alarmed at Altman's dishonesty and disregard of safety, OpenAI's board of directors attempted to fire him in 2023. Within 5 days, Altman was back in power, and the board members were forced to resign.10 It was crystal clear that profit ruled OpenAI and safety be damned.

Sam Altman is probably the most quoted business leader in the world and a world-class master of doublethink. There is a "Do-Gooder Sam" who still speaks as if he is running a benevolent nonprofit and must warn us constantly about how dangerous AI can be.4 And then there is a "Tech Tycoon Sam," running the most ruthless big AI company, tirelessly hyping its products, recklessly pushing innovation, heedless of safety worries, and indifferent to the fate of humanity.

This is "Non-Profit Sam" warning humanity:

- "Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war."11

- "As AI systems become more powerful, the possibility that we could lose control is a real concern."12

- "These models could be used for large-scale disinformation and offensive cyberattacks."13

- "There could be an impending fraud crisis with AI-powered voice and video impersonation becoming indistinguishable from reality."14

- "Even without a drop of malevolence from anyone, society can just veer in a strange direction as humans become reliant on models."15

- "Young people are over relying on ChatGPT to make emotional, life-altering decisions; collectively deciding we're going to live our lives the way AI tells us feels bad and dangerous."16

- "The lack of legal protections for AI conversations poses a privacy risk as users share personal information with AI."17

- "The warning lights are flashing for biological and cybersecurity risks, and the world is not taking this seriously."18

- The biggest fear is that AI "can go quite wrong, leading to major harmful disruption for people."19

- “People have a very high degree of trust in ChatGPT, which is interesting, because AI hallucinates. It should be the tech that you don't trust that much.”20

This is "For-Profit Sam" luring users and investors:

- “Generative AI has the potential to revolutionize nearly every industry, including healthcare, finance, and education.”21

- AI has the potential to address major global issues, including ending poverty, factory farming, abortions, and climate change.

- "Anyone in 2035 should be able to marshall the intellectual capacity equivalent to everyone in 2025; everyone should have access to unlimited genius to direct however they can imagine."21

- "Intelligence too cheap to meter is well within grasp."20

- “Generative AI has the potential to democratize access to creative tools and empower people to express themselves in new and exciting ways.”21

- “Generative AI is still in its early stages, and we have only scratched the surface of what it can do.”22

Can We Trust the Promises?

Which brings us back to our key question: can we trust the new promises of Sam Altman and OpenAI that they will work hard to make ChatGPT safe for psychiatric patients, or should we judge them based on their previous hypocritical behaviors? Are OpenAI's reassuring words no more than a public relations ploy and/or a legal play meant to cover its previous lack of meaningful action?

I have absolutely no trust in Sam Altman or OpenAI as public benefactors, but I do greatly trust their ability to calculate self-interest and protect their investment. Widespread and dramatic media coverage of psychiatric harms has shamed OpenAI and has the company running scared. Instead of being perceived as a virtuous provider of a wondrous future for humanity, OpenAI is joining the notorious company of previous callous exploiters, Big Tobacco and Big Pharma. Might class action lawsuits be far behind?

OpenAI may do the right thing for the wrong reasons, but that is just fine if it protects our patients. We must ensure that OpenAI stays scared and lives up to its newly established safety standards. Whether or not it is sincere in its promises of self-correction is much less relevant than its realization that safety is a good business model. Our federal government has made clear it will not regulate big AI and will fight states' efforts to regulate it. Big AI will regulate itself only if media shaming and class action lawsuits force it to. Let us hope that OpenAI keeps its promises, but we must stay vigilant and call out when it does not follow through.

Looking Forward

Our next piece in this series will focus on what causes chatbots to make dangerous mistakes with psychiatric patients. Correcting mistakes requires fixing basic chatbot programming—something not easy or cheap for OpenAI and the other big AI companies.

References

1. Frances A. Preliminary report on chatbot iatrogenic dangers. Psychiatric Times. August 15, 2025. https://www.psychiatrictimes.com/view/preliminary-report-on-chatbot-iatrogenic-dangers

2. What we’re optimizing ChatGPT for. OpenAI. August 4, 2025. Accessed August 13, 2025. https://openai.com/index/how-we're-optimizing-chatgpt/

3. Darley J. ChatGPT Responds to mental health worries with safety update. Technology Magazine. August 5, 2025. Accessed August 13, 2025.

4. Lichtenberg N. Sam Altman reveals his fears for humanity as ‘this weird emergent thing’ of AI keeps evolving: ‘No one knows what happens next.’ Fortune. https://fortune.com/2025/07/24/sam-altman-theo-von-podcast-ai-fears-humanity/

5. Vaswani A, Shazeer N, Parmar N, et al. Attention is all you need. Adv in Neur Info Proc Sys. 2017;30.

6. OpenAI and Elon Musk. OpenAI. March 5, 2025. Accessed August 14, 2025. https://openai.com/index/openai-elon-musk/

7. Microsoft: OpenAI Stake Valued At $147 Bln. Seeking Alpha. April 23, 2025. Accessed August 14, 2025. https://seekingalpha.com/article/4777183-microsoft-openai-stake-valued-at-147-bln

8. When was ChatGPT released? Scribbr. Accessed August 14, 2025. https://www.scribbr.com/frequently-asked-questions/when-was-chatgpt-released/#:~:text=ChatGPT%20was%20publicly%20released%20on,was%20on%20May%2024%2C%202023.

9. Capoot A, Sigalos M. OpenAI talks with investors about share sale at $500 billion valuation. CNBC. https://www.cnbc.com/2025/08/05/openai-talks-with-investors-about-share-sale-at-500-billion-valuation.html#:~:text=Thrive%20Capital%2C%20an%20investor%20in,from%20$10%20billion%20in%20June.

10. Field H. Former OpenAI board member explains why CEO Sam Altman got fired before he was rehired. CNBC. May 29, 2024. Accessed August 14, 2025.

11. Statement on AI risk. Center for AI Safety. Accessed August 18, 2025.

12. Bilge T, Miotti A. Do machines dream of electric owls? ControlAI. July 24, 2025. Accessed August 18, 2025.

13. Ordonez V, Dunn T, Noll E. OpenAI CEO Sam Altman says AI will reshape society, acknowledges risks: ‘A little bit scared of this.’ ABC News. March 16, 2023. Accessed August 18, 2025.

14. Duffy C. OpenAI CEO Sam Altman warns of an AI ‘fraud crisis.’ CNN. July 22, 2025. Accessed August 18, 2025.

15. Gross P. OpenAI CEO says technology has life-altering potential, both for good and bad. CityBusiness. July 28, 2025. Accessed August 18, 2025.

16. Chandonnet H. Sam Altman is worried some young people have an ‘emotional over-reliance’ on ChatGPT when making decisions. MSN. July 23, 2025. Accessed August 18, 2025.

17. Iglesias W. How safe is your AI conversation? What CIOs must know about privacy risks. CIO. August 5, 2025. Accessed August 18, 2025.

18. Sam Altman says “the warning lights are flashing” for biological risks and cybersecurity risks. “The world is not taking this seriously.” Reddit. July 29, 2025. Accessed August 18, 2025.

19. Kasperowicz P. OpenAI CEO Sam Altman admits his biggest fear for AI: ‘It can go quite wrong.’ Fox News. May 16, 2023. Accessed August 18, 2025.

20. ‘AI hallucinates’: trusting ChatGPT too much? OpenAI CEO Sam Altman has a warning for you. The Economic Times. July 1, 2025. Accessed August 18, 2025.

21. Altman S. Three observations. Accessed August 18, 2025.

22. Altman S. The gentle singularity. Accessed August 18, 2025.

23. Krause C. Navigating the future: top 10 technology trends for businesses in 2024 and beyond. CDO Times. December 29, 2023. Accessed August 18, 2025.

24. Gesikowski C. Generative AI journey: from pictures to robots. Medium. April 14, 2024. Accessed August 18, 2025.
