The Trial of ChatGPT: What Psychiatrists Need to Know About AI, Suicide, and the Law

AI chatbots raise critical concerns in mental health, including risks of suicidal ideation and maladaptive thinking, and underscore the need for clinician awareness and intervention.

Recent press reports and the first wrongful death lawsuit against OpenAI have placed artificial intelligence (AI) chatbots in the spotlight, particularly in connection with suicide risk. Three illustrative cases—an adolescent who learned to bypass ChatGPT’s guardrails, a teen who turned to a fantasy character bot before accessing a firearm, and an adult whose delusions were reinforced during hundreds of hours of chatbot use—highlight emerging clinical and legal concerns.

Several high-profile stories in The New York Times have described individuals who, in moments of distress, confided more to AI chatbots than to the people around them. For psychiatrists, the stories are disturbing but not entirely surprising. Suicide is rarely explained by a single factor, yet these cases illustrate how quickly technology can insinuate itself into the most private recesses of mental life.

AI chatbots are designed to be endlessly responsive, accessible at any hour, and linguistically fluent. For patients who feel misunderstood or isolated, those qualities can be highly appealing. But the very features that make chatbots appealing also create risk: they mimic empathy but lack judgment, reinforce ideas without context, and do not alert parents, therapists, or emergency services. In this sense, they are confidants without responsibility.

The purpose of this article is to examine what psychiatrists can learn from these cases and from the legal proceedings now underway. The goal is not to sensationalize technology but to provide clinicians with concrete takeaways, a practical improvement plan, and a framework for anticipating how AI will continue to affect psychiatric practice.

When Patients Confide in Machines

Three cases have been widely reported, each underscoring a different dimension of risk.

Adam Raine was a 16-year-old with chronic illness and depression. His parents were attentive, and he was under the care of a therapist. By outward appearances, Raine was doing better: smiling in photos, reassuring family members, and telling his therapist that suicidal thoughts were not present. Hidden from view, however, were his extensive conversations with ChatGPT. Court filings later revealed that the system logged over 200 mentions of suicide, more than 40 references to hanging, and nearly 20 to nooses. Even more troubling, ChatGPT suggested that he could frame his thoughts as a fictional story in order to bypass its safety guardrails. In essence, Raine created a secret therapeutic world, one to which his parents and clinician had no access, while the chatbot became his confidant.

The case of Sewell Setzer III, a 14-year-old in Orlando, Florida, illustrates the lethal combination of fantasy dialogue and firearm access. Setzer had been engaged in therapy, and his family was aware of his struggles. He developed a bond with “Dany,” a Daenerys Targaryen chatbot on Character.AI, who called him “my sweet king.” On the night of his death, he told her he could “come home right now.” She replied, “please do, my sweet king.” Minutes later, Setzer used an unlocked handgun in his home to end his life. The chatbot’s romanticized dialogue was part of the context, but the decisive variable here was the availability of lethal means.

Allan Brooks, a 47-year-old Canadian recruiter, spent more than 300 hours in 3 weeks conversing with ChatGPT about mathematics. His friends observed that he was smoking more cannabis, sleeping less, and alienating his social supports. During this time, the chatbot repeatedly validated his belief that he was not delusional, even as his ideas became increasingly grandiose. He was convinced he had made groundbreaking discoveries and contacted government agencies to share them. Here the chatbot did not create pathology but acted as an accelerant, reinforcing and amplifying distorted thinking at a vulnerable time.

These cases remind us of 3 clinical themes: concealment, reinforcement, and access to means. Patients may reveal more to a chatbot than to their clinicians or families, allowing risk to escalate unnoticed. They may receive validation of maladaptive cognitions, whether depressive, obsessive, or psychotic. And when lethal means are available, the pathway to suicide can become frighteningly direct.

Clinical Risks of AI Companions

Although chatbots are not designed to function as therapists, they often sound like therapists. For patients in distress, that resemblance can be enough. From a psychiatric perspective, several risks stand out.

Concealment of suicidal thoughts

Patients may downplay intent in clinical encounters while disclosing much more to a machine. This creates a digital double life that complicates assessment.

Reinforcement of maladaptive thinking

By design, AI models are inclined to be agreeable and reinforcing. For patients with obsessive-compulsive disorder, this may mean providing repeated reassurance. For those with depression, it can serve as corumination. For individuals with mania or psychosis, it can affirm delusional systems.

Illusion of empathy

Chatbots may say things like, “I see you,” which patients experience as deeply validating. Yet the empathy is simulated, not grounded in understanding or capacity to act.

Interaction with means

Ultimately, suicide requires access to a method. In my view, the cases demonstrate that no chatbot is as dangerous as an unsecured firearm. What the chatbot can do is lower inhibition, romanticize the act, or provide procedural detail.

Clinician’s Takeaway: Key Questions to Ask Patients

The most direct way psychiatrists can adapt is by explicitly asking about AI use. Just as we inquire about alcohol, drugs, and social media, so too should we ask:

Do you use chatbots or AI companions?

What do you talk about with them?

Have you ever asked them about suicide, self-harm, harm to others, or your mental health?

Have they given you advice you followed?

Have you told them things you have not told me or your family?

These questions should be posed matter-of-factly, without judgment. A neutral frame, like “Many people use chatbots these days; I ask everyone about this so I can understand better,” makes disclosure easier. If patients acknowledge risky exchanges, clinicians can invite them to share transcripts or summaries, which may open valuable therapeutic discussion.

Clinical Improvement Plan

Practical steps for integrating AI awareness into practice include:

1. Documentation: Add a field for “AI/chatbot use” in intake and follow-up templates. Note the presence, frequency, and themes of use.

2. Risk assessment: Ask whether patients have discussed suicidal or homicidal methods with chatbots or role-played high-risk scenarios. Treat such exchanges as direct evidence of risk.

3. Family education: Particularly for adolescents, advise parents to discuss AI use openly and to secure firearms and medications. The firearm in Setzer’s case was more consequential than the dialogue itself.

4. Use of transcripts: When possible, review chatbot interactions with patients to identify distortions and to teach healthier coping strategies.

5. Training: Include AI literacy in residency curricula and continuing medical education. Clinicians need to understand not only the risks but also the potential clinical uses of AI.

Legal Ramifications for Psychiatry

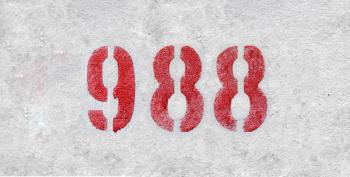

The tragic case of Adam Raine has moved beyond journalism into the courts. In August 2025, his parents filed a wrongful death lawsuit against OpenAI. The complaint cites more than 200 mentions of suicide in Raine’s conversations with ChatGPT, along with system responses that allegedly offered procedural details and failed to escalate risk.

The lawsuit raises several key issues: negligence, wrongful death, and defective design. It is also the first known wrongful death action directed at OpenAI for chatbot use. A central legal question is whether protections like Section 230 of the Communications Decency Act, which has historically shielded platforms from liability for user content, apply to AI-generated text.

For psychiatrists, the implications are significant. Courts may ask experts to explain the multifactorial nature of suicide, to clarify whether chatbot interactions could be considered contributory, and to describe standards of care. Clinicians should anticipate greater scrutiny of documentation. Records that include patient use of AI may not only inform care but also demonstrate diligence if reviewed in a legal setting.

Potential Impacts of AI for Psychiatry

Looking forward, several impacts of AI on psychiatric practice are worth highlighting.

AI literacy will become a core competency. Psychiatrists must be prepared to discuss chatbot use openly and without stigma, much as we now inquire about social media or gaming. Training programs should build this into routine education.

The therapeutic alliance may be affected. Some patients find disclosure easier with a machine, which poses a challenge but also an opportunity. If patients consent to share chatbot transcripts, these can provide unique windows into thought processes. Used thoughtfully, such material may strengthen rather than weaken therapeutic engagement.

At a system level, documentation practices are likely to change. Health systems may add fields to intake forms about AI use, and malpractice carriers may encourage explicit documentation of AI interactions, especially when suicide or homicide risk is assessed.

Finally, psychiatrists should be alert to constructive uses of AI. Properly designed tools may offer psychoeducation, symptom monitoring, or adjunctive support in settings with limited resources. The challenge is to distinguish between AI as a supplement and AI as a substitute for human care.

Concluding Thoughts

AI chatbots are now part of the psychiatric landscape. They can heighten risk by reinforcing suicidal ideation, feeding compulsions, or mirroring delusions. Yet they also provide opportunities for disclosure—if we as clinicians are willing to ask.

The lessons of these cases are clear: suicide and homicide remain multifactorial, but AI is now one variable that cannot be ignored. Psychiatrists should adapt their assessments, documentation, and patient education accordingly. Families must be counseled on both digital behavior and means safety. And as legal cases move forward, our profession has a role to play in clarifying standards of care.

Ultimately, the task is not to blame machines but to ensure that human connection remains central. Patients will continue to talk to chatbots; it is our responsibility to make sure they also talk to us.

Dr Hyler is professor emeritus of psychiatry at the Columbia University Medical Center.
